Introduction – Why baby‑NICER?

We are building baby‑NICER because the hardest problems of this century—climate resilience, equitable prosperity, sustainable cities—will be solved not by lone geniuses but by diverse teams that deliberate and act together (Rock & Grant 2017 ; Woolley et al. 2010 ). At Towards People we champion more democratic, transparent and collaborative modes of work; recent evidence shows that well‑designed AI tools can amplify exactly that sort of collective intelligence (Fernández‑Vicente 2025 ). Baby‑NICER is our first concrete step in that direction—a modular agent that lives inside the software teams already use, remembers what matters, and grows in understanding alongside its human collaborators. Here is an 8 minute video of our progress in April 2025 to get @babyNICER working with us over Slack.

Here is a NICER SYSTEMS project update from Interrupt Lang* London Meet-Up 06/06/2025, regarding the integration of the SQL agent (the first in the Swarm)

The story so far unfolds in three deliberate moves

- Put large language models where the team lives.

Our starting point was pragmatic: bring ChatGPT‑class models directly into Slack so every teammate can query, brainstorm or draft inside a shared thread (OpenAI × Slack 2024). Will Fu‑Hinthorn’s starter repository, a ReAct agent wired to Slack, became the scaffold I forked into baby‑NICER.

- Give the agent a human‑like memory.

A chatbot that forgets after a handful of turns is a party trick, not a partner (Karimi 2025). LangMem supplied the conceptual and technical scaffold (semantic, episodic and procedural memories) so the agent can learn over time (LangChain 2024). We persist those memories in BigQuery, which is cost‑efficient, SQL‑friendly and can surface straight into Google Sheets for human inspection (Google Cloud 2025a; Google Cloud 2025b). The same interface can be swapped for Snowflake or another warehouse with minimal code changes.

- Close the learning loop and add specialists.

Next come continuous prompt‑optimisation (Zhang et al. 2025) and a growing cast of focused agents:

- a dbt / SQL agent to keep data pipelines clean and organise trusted tables and views (dbt Labs 2025);

- an Apache Superset agent that turns those tables into charts on demand (ASF 2025);

- social‑listening agents that watch the wider discourse; and

- ultimately a “Habermas machine” that nudges conversations toward inclusivity and reason‑giving (Tessler et al. 2019).

When these modules knit together, the project will graduate from baby‑NICER to NICER—the Nimble Impartial Consensus Engendering Resource.

What you will find in the rest of this post

- A guided tour of the current architecture: how I started with a Slack bot and chiseled it into a memory‑driven LangGraph agent, using the langmem tools and a new memory store that I built on top of existing memory and vector store classes.

- Under the hood with BigQueryMemoryStore: inheritance chains, patched vector stores, and a plain‑English primer on embeddings and vector search.

- Comparative notes on multi‑agent frameworks—Swarm, CrewAI, LangManus—so you can see why I made the choice of using Swarm for the NICER system.

- Reflections from AI history and philosophy: Russell & Norvig on knowledge, Kurzweil on pattern memory, Chalmers and Searle on why none of this is consciousness, and Floridi on the ethics of remembering.

- The road ahead: how modular agents will help flesh and bone teams—starting with community‑vitalisation and urban‑farming projects we are prototyping—become more cohesive, more inclusive and more resilient.

If AI is to serve humanity, it must amplify our capacity to understand one another and act in concert. Think of baby‑NICER as the prototype of an AI colleague whose sacred job it is to create a culture of joy, inclusion and cohesion.

What is “agentic AI”?

At its simplest, an agent is “anything that perceives its environment and acts upon it,” the canonical definition given by Russell & Norvig and widely used across AI research. Modern agentic AI systems build on that foundation but add three practical pillars:

| Pillar | In practice | Why it matters for baby‑NICER |
| --- | --- | --- |
| Autonomy | The agent can decide when to call external tools or ask follow‑up questions without explicit step‑by‑step instructions | Frees the human team from micro‑managing every action. |
| Tool use / function calling | LLMs output JSON “function calls” that trigger code, APIs or databases | Lets the Slack‑based agent run SQL, create charts, or store memories. |
| Memory | Short‑term context and long‑term stores (semantic, episodic, procedural) | Converts a forgetful chatbot into a learning teammate with superhuman memory. |

The industry press sometimes frames this evolution as the “agentic era” of AI —systems that do more than chat: they act on behalf of users, coordinate with other agents, and remember what they learn.
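To make the tool‑use pillar concrete, here is a minimal sketch of how a JSON function call might be routed to code. It assumes the model emits an object with name and arguments keys (a common shape, though the exact format varies by provider); run_sql and store_memory are hypothetical stand‑ins, not baby‑NICER’s actual tools.

```python
import json

# Hypothetical tools: real ones would query BigQuery or write to a memory store.
def run_sql(query: str) -> str:
    return f"(pretend result of: {query})"

def store_memory(content: str) -> str:
    return f"stored: {content}"

# Registry that maps the tool names the model may emit to Python callables.
TOOLS = {"run_sql": run_sql, "store_memory": store_memory}

def dispatch(function_call_json: str) -> str:
    """Parse a JSON function call emitted by the model and run the matching tool."""
    call = json.loads(function_call_json)
    name = call["name"]
    if name not in TOOLS:
        return f"error: unknown tool {name!r}"
    return TOOLS[name](**call.get("arguments", {}))

# Assumed model output; the payload shape is an illustration, not a provider spec.
model_output = '{"name": "store_memory", "arguments": {"content": "Maya owns the quarterly OKR"}}'
print(dispatch(model_output))
```

The registry is the important design choice: the model only ever names a tool, and the surrounding code decides what that name is allowed to execute.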

The ReAct pattern: reasoning and acting in a loop

Traditional language‑model prompts either (a) reason—produce a chain‑of‑thought—and then stop, or (b) act—call a tool—without showing their thinking.

ReAct (Reason + Act) interleaves the two:

- Thought: the LLM writes a short reasoning trace (“I should look up today’s revenue”).

- Action: the trace ends by calling an available tool (get_kpi("revenue", "today")).

- Observation: the tool returns data to the model.

- Next Thought / Action … until the task is solved or a termination condition is met.

This synergy improves factuality and task success because the model can gather information mid‑reasoning rather than hallucinate.
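The Thought → Action → Observation cycle above can be sketched in a few lines. This is a toy under stated assumptions: scripted_llm stands in for a real model, get_kpi is a hypothetical tool that returns dummy data, and the "Action: tool(...)" text format is one possible convention rather than what any particular framework emits.

```python
import re

# Hypothetical tool returning dummy data; a real one would query a warehouse.
def get_kpi(name: str, period: str) -> str:
    return {"revenue": "12,400 EUR"}.get(name, "unknown")

TOOLS = {"get_kpi": get_kpi}

def scripted_llm(transcript: str) -> str:
    """Stand-in for an LLM: act first, then answer once an observation exists."""
    if "Observation:" not in transcript:
        return ("Thought: I should look up today's revenue.\n"
                'Action: get_kpi("revenue", "today")')
    observation = transcript.rsplit("Observation: ", 1)[1].strip()
    return ("Thought: I have the number.\n"
            f"Final Answer: Revenue today is {observation}.")

ACTION_RE = re.compile(r"Action: (\w+)\((.*)\)")

def react(question: str, max_steps: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = scripted_llm(transcript)
        transcript += "\n" + step
        if "Final Answer:" in step:          # termination condition
            return step.split("Final Answer: ", 1)[1]
        match = ACTION_RE.search(step)
        if match is None:                    # neither an action nor an answer
            break
        tool_name, raw_args = match.groups()
        args = re.findall(r'"([^"]*)"', raw_args)
        observation = TOOLS[tool_name](*args)
        transcript += f"\nObservation: {observation}"  # fed back for the next turn
    return "No answer within the step limit."

print(react("What is today's revenue?"))
```

The step limit matters in practice: without it, a model that never emits a final answer would loop forever.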

How LangChain implements ReAct

LangChain wraps that loop in a ready‑made ReAct Agent (sometimes shown as create_react_agent) :

LLM ↔ LangChain Agent

↻ (Thought → Tool → Observation)*

Developers (or, through a graphical user interface, perhaps non‑developers too) supply:

- an LLM (e.g. GPT‑4, DeepSeek R1, Qwen2.5-Omni-7B ),

- a list of tools (functions with JSON schemas),

- an optional custom prompt.

The framework takes care of parsing the model’s “Thought/Action” lines, executing the action, and feeding the observation back for the next turn.

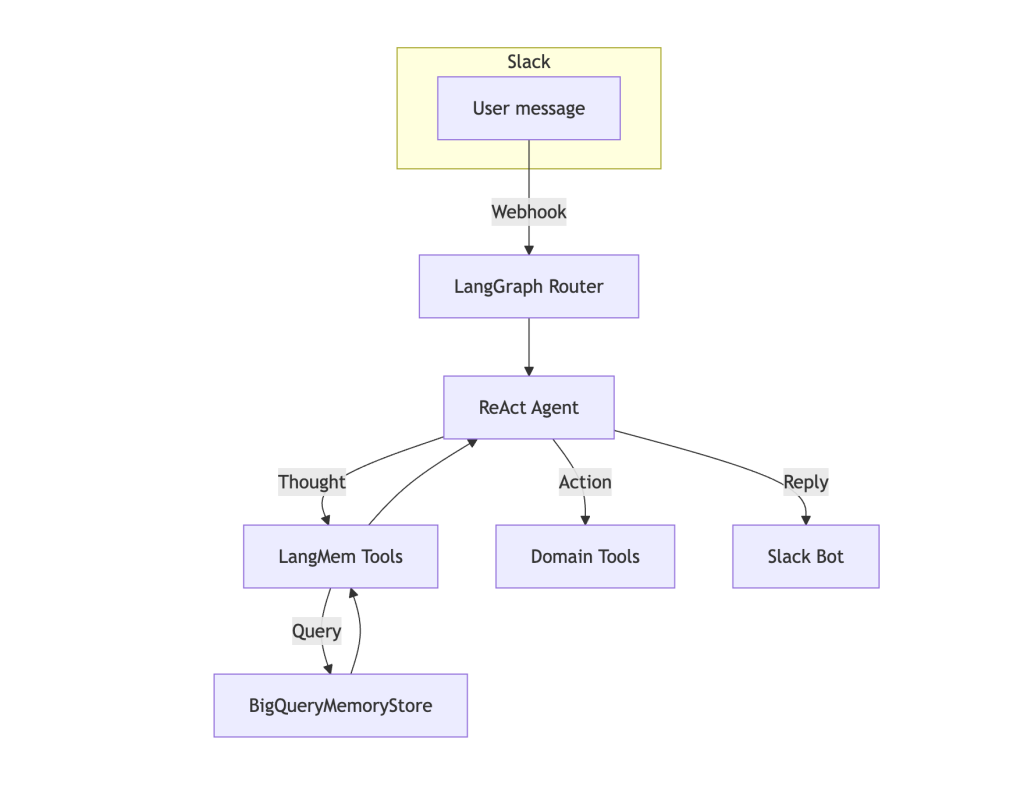

From ReAct to Slack: the starter repository

Will Fu‑Hinthorn’s langgraph‑messaging‑integrations repo glues that agent loop to Slack events: a message arrives, LangGraph routes it through a ReAct agent, and the reply is posted back to the channel.

I forked that codebase as the launch‑pad for baby‑NICER, added LangMem tools, and swapped in BigQuery as the vector store. The result is a Slack “teammate” that can reason, call functions, and—thanks to memory—learn over time.

Why this matters for the rest of this post

- Agentic framing: Memory only matters if the AI is autonomous enough to reuse it. ReAct provides that autonomy.

- Tool interface: LangMem’s manage_memory and search_memory tools appear to the agent exactly like any other ReAct tool, so storing or recalling knowledge is just another action in the loop.

- Scalability: Because ReAct is tool‑agnostic, we can later plug in the SQL agent, charting agent, or social‑listening agent without changing the core dialogue pattern.

With those concepts in place, we can now dig into the three‑tier memory model—semantic, episodic, procedural—and see how BigQueryVectorStore turns them into a persistent collective memory store.

Why an External Memory When the LLM “Already Knows So Much”?

Large language models come with an impressive stock of facts. Their weights literally memorise patterns from pre‑training, but that baked‑in knowledge is static, generic and sealed (you cannot append or edit it without an expensive re‑train). In practice that means three gaps:

- Fresh or proprietary information is invisible.

Yesterday’s sprint retro, today’s sales numbers, a new regulatory rule: none exist inside the frozen model weights. External memory lets the agent capture such post‑training facts the moment they appear.

- Team‑specific context is easily lost.

An LLM will not reliably recall your design principles, a colleague’s preferred file format, or a decision made last quarter; those shards of context are either too niche to be in the pre‑training corpus or too recent to be encoded. Storing them as semantic or episodic items means baby‑NICER can resurface them on demand.

- Hallucinations rise when the model guesses.

When asked for something it was never trained on, the model will still predict tokens, often inventing references or numbers. Retrieval‑augmented generation mitigates this by replacing guessing with lookup: the agent queries its BigQuery vector store, fetches the most relevant chunk, and then conditions the LLM’s answer on that ground truth.

In short, LangMem’s external stores turn a brilliant, but embarrassingly forgetful, polymath into a continuously learning teammate. The LLM supplies broad linguistic competence; the memory supplies up‑to‑the‑minute, organisation‑specific, and auditable knowledge it can’t otherwise keep.

Integrating Long‑Term Memory with LangMem

Thus it was central to baby‑NICER’s design to weave in an explicit, cognitively‑inspired long‑term memory layer. The open‑source LangMem library offers three complementary memory abstractions—semantic, episodic and procedural—a triad first formalised in cognitive psychology (Tulving 1972) and later refined to distinguish “knowing that” from “knowing when” and “knowing how” (Cohen & Squire 1980) . In baby‑NICER these stores are not abstractions; each is a concrete tool pair—manage_*_memory and search_*_memory—that the agent can invoke while chatting, thanks to LangMem’s helper functions (LangMem Docs 2025) .

1 Semantic memory – “knowing that”

Semantic memory holds facts the agent acquires after its LLM pre‑training cut‑off: company lore, user preferences, policy snippets. LangMem represents each fact with a Pydantic Fact model and persists it through a SemanticMemoryStore, which in our implementation is backed by BigQuery vector search (Google Cloud 2024) . Every fact is embedded, stored in a BigQuery table, and instantly searchable with cosine‑similarity SQL (LangChain BigQuery VectorStore source) . Because the store is external, the knowledge base grows continually, unhindered by the frozen LLM weights—a best‑practice echoed in retrieval‑augmented generation research (Guu et al. 2020; Izacard & Grave 2021) .

2 Episodic memory – “remembering when”

Episodic memory encodes significant interactions as Episode objects with fields such as observation, thoughts, action and result. This design mirrors psychological definitions of episodic recollection as time‑stamped personal events (VerywellMind 2024) . If the agent walks through a multi‑step troubleshooting sequence, the entire trace is saved; later, a similarity search can retrieve that episode to guide current reasoning. The result is genuine learning‑from‑experience, not merely fact recall—just what case‑based‑reasoning theorists advocate for adaptive AI (Kolodner 1992) .

3 Procedural memory – “knowing how”

Procedural memory stores reusable skills: each Procedure records a task name, pre‑conditions and ordered steps. In humans, such “how‑to” knowledge is implicit and resilient (Cohen & Squire 1980) ; in baby‑NICER it is explicit, so the agent can inspect or refine its own playbooks. A ProceduralMemoryStore persists these JSON recipes via the same BigQuery backend, meaning a freshly spun‑up instance can adopt the accumulated best practices of its predecessors.
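As a rough illustration of the three record types, the sketch below uses standard‑library dataclasses as a stand‑in for the Pydantic models mentioned above. The Episode and Procedure fields follow the descriptions in the text; the Fact field name (content) and the to_json helper are assumptions made for this example.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class Fact:                 # semantic memory: "knowing that"
    content: str            # assumed field name

@dataclass
class Episode:              # episodic memory: "remembering when"
    observation: str
    thoughts: str
    action: str
    result: str

@dataclass
class Procedure:            # procedural memory: "knowing how"
    task: str
    preconditions: list[str] = field(default_factory=list)
    steps: list[str] = field(default_factory=list)

def to_json(memory) -> str:
    """Serialise any of the three record types to a JSON string for storage."""
    return json.dumps(asdict(memory), sort_keys=True)

# Dummy example data, for illustration only.
episode = Episode(
    observation="Nightly ETL failed on the orders table",
    thoughts="Schema drift is the most likely cause",
    action="Re-ran the job after refreshing the schema",
    result="Job succeeded",
)
restored = Episode(**json.loads(to_json(episode)))
print(restored == episode)
```

Keeping the records as typed objects, rather than free text, is what lets a later retrieval hand the agent a structured item instead of a raw blob.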

Memory tools in action

LangMem auto‑generates two tools per store (LangMem API Docs 2025) :

| Memory type | Write tool | Read tool | Example call in dialogue |
| --- | --- | --- | --- |
| Semantic | manage_semantic_memory | search_semantic_memory | “Remember that the quarterly OKR owner is Maya.” |
| Episodic | manage_episodic_memory | search_episodic_memory | “Recall what we tried the last time the ETL failed.” |
| Procedural | manage_procedural_memory | search_procedural_memory | “Save these steps as the standard ‘on‑call handover’ guide.” |

The tools accept a namespace template—in our Slack deployment the key is typically (workspace_id, channel_id, user_id)—so memories are neatly partitioned by team or thread. Storage and retrieval run through LangGraph’s async store interface, so they don’t block the chat loop.
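A namespace template of this kind can be thought of as a tuple of placeholders that is filled in at run time. The sketch below shows the idea in plain Python; resolve_namespace and the example IDs are hypothetical and only approximate what a library does internally.

```python
def resolve_namespace(template: tuple[str, ...], configurable: dict[str, str]) -> tuple[str, ...]:
    """Replace "{key}" placeholders with runtime values; literal parts pass through."""
    resolved = []
    for part in template:
        if part.startswith("{") and part.endswith("}"):
            key = part[1:-1]
            if key not in configurable:
                raise KeyError(f"missing namespace value: {key}")
            resolved.append(configurable[key])
        else:
            resolved.append(part)
    return tuple(resolved)

# Template mirroring the (workspace_id, channel_id, user_id) key described above.
TEMPLATE = ("{workspace_id}", "{channel_id}", "{user_id}")

# Made-up Slack-style identifiers for illustration.
namespace = resolve_namespace(
    TEMPLATE, {"workspace_id": "T012", "channel_id": "C345", "user_id": "U678"}
)
print(namespace)  # ('T012', 'C345', 'U678')
```

Failing loudly on a missing key is deliberate here: silently writing to a partial namespace could leak one team’s memories into another’s.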

From stateless chatbot to context‑aware collaborator

By exploiting those three stores during ReAct reasoning, baby‑NICER can:

- Personalise: “Last week you said you prefer dark‑mode dashboards.” (semantic recall)

- Generalise: reuse a previous pipeline‑debug episode to fix a new but similar breakage (episodic reuse).

- Execute: follow a saved procedure for rotating GCP credentials step‑by‑step (procedural recall).

Together the stores give the agent a modular cognitive architecture analogous to human memory taxonomies (Tulving 1972) , enabling richer and safer behaviour than any stateless LLM prompt alone.

By integrating these memory capabilities, baby-NICER moves beyond a stateless chatbot. It personalizes interactions by recalling what a user said last week (episodic memory), remembering facts from documentation it ingested (semantic memory), or following a multi-step plan it formulated earlier (procedural memory). In essence, LangMem gives baby-NICER a cognitive architecture reminiscent of human memory systems. Just as psychology distinguishes semantic “knowing that” from episodic “remembering when” and procedural “knowing how,” this agent has separate channels for each, enabling richer, context-aware behavior.

BigQueryMemoryStore: Extending LangChain for Scalable Memory

You can safely skip this section if you’re not interested in the technical details.

To implement long-term memory, baby-NICER needs a place to store vector embeddings of content (for semantic search) along with structured data. The solution was to use Google BigQuery as a vector database, by extending LangChain’s vector store interface. Baby-NICER introduces a custom BigQueryMemoryStore class, which builds on LangChain’s BigQuery vector support in the community extensions.

Under the hood, BigQueryMemoryStore combines several layers of abstraction:

- It inherits from AsyncBatchedBaseStore, a LangGraph base class for asynchronous storage operations. This base provides the standard async methods like aput (asynchronous put) and aget (asynchronous get) to store and retrieve items in a namespace/key-value fashion, possibly with vector indexing. By inheriting this, BigQueryMemoryStore can be used seamlessly as a backend store for LangMem’s tools, which expect an async store.

- It uses a BigQueryVectorStore internally. LangChain’s BigQueryVectorStore (from the langchain-google-community package) is a vector store implementation that utilizes BigQuery’s native vector search capabilities. BigQuery recently introduced a feature to index and search embeddings using a VECTOR_SEARCH function. The BigQueryVectorStore class in LangChain is designed to leverage that – it stores documents with an embedding vector in a BigQuery table, and can query for nearest neighbors via BigQuery SQL. In baby-NICER, a subclass PatchedBigQueryVectorStore overrides some methods (like add_texts_with_embeddings) to better handle JSON and structured data insertion. For example, if the content to store is a dict (structured memory item), the patch ensures it gets properly serialized as JSON string or record in BigQuery.

- The BigQueryMemoryStore itself doesn’t directly subclass BigQueryVectorStore; instead, it composes one. The from_client classmethod creates a PatchedBigQueryVectorStore with the given BigQuery client, dataset/table names, and embedding model. It then instantiates BigQueryMemoryStore(vectorstore=…, content_model=…) wrapping that vector store. This design allows separation of concerns: the vector store handles low-level operations (embedding, upsert, similarity search) in BigQuery, while the memory store provides the higher-level interface LangMem expects (namespaces and typed content).

- BigQueryMemoryStore uses the content schema to enforce types. When baby-NICER calls aput to save a memory, it passes in a dict that includes a “content” field (plus metadata like namespace). The memory store’s aput will normalize that content: if it’s not already a dict matching the Pydantic model, it will wrap it (e.g. put a raw string into a {content: …} dict). It then JSON-serializes this content to a string for embedding purposes. A LangChain Document is created with page_content as the JSON string (so that the embedding model will vectorize the entire content) and metadata containing the namespace and structured fields. This document is added to the vector store with add_documents(), which under the hood calls BigQuery to insert a new row with the text’s embedding. Conversely, on retrieval via aget, the store fetches the document by ID from BigQuery, then reconstructs the Pydantic object from the stored JSON string before returning an Item. This ensures that when baby-NICER retrieves a memory, it gets it back in a nicely structured form (e.g., a Fact or Episode object in the Item.value) rather than a raw blob.

This hybrid approach (LangChain + BigQuery) is powerful. It means baby-NICER can scale its memory: BigQuery can handle millions of records and perform similarity search efficiently using vector indexes . By inheriting asynchronous store behavior, the agent can store and fetch memories without blocking, which is important when multiple agents or users are interacting. The design also cleanly separates the vector search logic from the agent logic – from the agent’s perspective, it just calls a tool to “search episodic memory,” and under the hood that becomes a BigQuery VECTOR_SEARCH query returning relevant snippets.

In summary, BigQueryMemoryStore extends the LangGraph/LangChain infrastructure to use a cloud database as the long-term memory backend. It inherits the interface of a memory store (from AsyncBatchedBaseStore) and plugs in a vector store (BigQuery) for actual data operations, marrying the two. The result is a custom memory module that fulfills the promises of LangMem’s design (structured, typed memory with vector retrieval) at cloud scale. It’s a neat example of using composition and inheritance in tandem: inheritance to fit the expected store pattern, and composition to leverage existing BigQuery integration .
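The composition pattern described in this section can be sketched without any cloud dependency. Everything below is an illustrative assumption: InMemoryVectorStore stands in for the BigQuery-backed vector store, SketchMemoryStore is not the real BigQueryMemoryStore, the inheritance from AsyncBatchedBaseStore is left out, and a dataclass replaces the Pydantic content model.

```python
import asyncio
import json
from dataclasses import dataclass

@dataclass
class Fact:
    content: str

class InMemoryVectorStore:
    """Stand-in for the vector store: keeps rows in a dict, no embeddings."""
    def __init__(self):
        self.rows = {}

    def add_document(self, doc_id, page_content, metadata):
        self.rows[doc_id] = {"page_content": page_content, "metadata": metadata}

    def get(self, doc_id):
        return self.rows.get(doc_id)

class SketchMemoryStore:
    """Wraps (composes) a vector store and exposes namespace/key async methods."""
    def __init__(self, vectorstore, content_model):
        self.vectorstore = vectorstore
        self.content_model = content_model

    async def aput(self, namespace, key, value):
        # Normalise: wrap a raw string into a {"content": ...} dict.
        content = value if isinstance(value, dict) else {"content": value}
        self.content_model(**content)  # schema check; raises TypeError on mismatch
        doc_id = "/".join((*namespace, key))
        self.vectorstore.add_document(
            doc_id, json.dumps(content), {"namespace": list(namespace)}
        )

    async def aget(self, namespace, key):
        row = self.vectorstore.get("/".join((*namespace, key)))
        if row is None:
            return None
        # Rebuild the typed object from the stored JSON string.
        return self.content_model(**json.loads(row["page_content"]))

async def demo():
    store = SketchMemoryStore(InMemoryVectorStore(), Fact)
    await store.aput(("T012", "C345"), "okr-owner", "Maya owns the quarterly OKR")
    return await store.aget(("T012", "C345"), "okr-owner")

print(asyncio.run(demo()))
```

Because the memory store only talks to the vector store through two methods, swapping the dict for a warehouse-backed implementation would not change the code that calls aput and aget.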

How Vector Search and Embeddings Power Memory Retrieval

Embeddings turn statements of meaning into lists of numbers; vector search then turns those lists of numbers back into linguistic meaning. Together they are the engine that lets baby‑NICER recall the right memory at the right moment—without relying on brittle keyword matches.

Embeddings – turning text into maths

An embedding is a high‑dimensional list of numbers that captures the meaning of a text span (IBM 2024). A sentence such as “Schedule a meeting for next week” becomes a 1 536‑length vector when encoded by OpenAI’s text‑embedding‑3‑small or a comparable open‑source model (Stack Overflow 2023). Larger models often use higher‑dimensional representations, which can capture more subtlety in meaning. In such an embedding space, semantically similar sentences land near one another; distance is measured with metrics such as cosine similarity (Lewis et al. 2020).
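Cosine similarity itself is simple to compute: it is the dot product of two vectors divided by the product of their lengths. The sketch below uses hand‑made three‑dimensional vectors purely for illustration; real embeddings have hundreds or thousands of dimensions and come from a trained model.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Dot product of a and b divided by the product of their Euclidean norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-made toy vectors (assumptions, not output of any embedding model).
meeting = [0.9, 0.1, 0.0]
appointment = [0.8, 0.2, 0.1]
invoice = [0.0, 0.2, 0.9]

# Related concepts should score higher than unrelated ones.
print(cosine_similarity(meeting, appointment) > cosine_similarity(meeting, invoice))
```

A score near 1 means the vectors point in almost the same direction; a score near 0 means they are unrelated.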

Each time baby‑NICER stores a new Fact, Episode or Procedure, it first calls its chosen embedding model. The model returns a vector, which is stored—together with the raw JSON—in a BigQuery table (Google Cloud 2025a). Because the vector lives outside the frozen LLM weights, the knowledge base keeps growing long after training day. Note that it only takes one line of code to replace the embedding model with another one.

Vector search – finding the nearest meaning

When the agent invokes search_semantic_memory, BigQueryMemoryStore embeds the query the same way, then sends a SQL call to BigQuery’s VECTOR_SEARCH function (Google Cloud 2025b) . That function performs an Approximate Nearest‑Neighbor (ANN) lookup over a vector index, returning the top‑k closest embeddings in milliseconds—even across millions of rows (Google Cloud 2025c) . Because distance in embedding space correlates with semantic relatedness, a query about “annual revenue” reliably surfaces a stored fact about “yearly sales,” even though the wording differs.

This pattern is the heart of Retrieval‑Augmented Generation (RAG): ground an LLM’s answer on external facts fetched by similarity search (Lewis et al. 2020) .
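For readers who want to see roughly what such a query looks like, the sketch below builds a parameterised SQL string around VECTOR_SEARCH. The argument layout follows my reading of the BigQuery documentation and should be checked against it; the table name and the doc_id, content and embedding column names are assumptions, and build_vector_search_sql is a hypothetical helper rather than part of baby‑NICER.

```python
import re

# project.dataset.table; table names cannot be bound as query parameters,
# so the name is validated before being interpolated into the SQL text.
TABLE_RE = re.compile(r"[A-Za-z0-9_-]+\.[A-Za-z0-9_]+\.[A-Za-z0-9_]+")

def build_vector_search_sql(table: str, top_k: int = 5) -> str:
    """Return a query string; @query_embedding is meant to be bound at run time."""
    if not TABLE_RE.fullmatch(table):
        raise ValueError("expected a name of the form project.dataset.table")
    if top_k < 1:
        raise ValueError("top_k must be at least 1")
    return f"""
SELECT base.doc_id, base.content, distance
FROM VECTOR_SEARCH(
  TABLE `{table}`,
  'embedding',
  (SELECT @query_embedding AS embedding),
  top_k => {int(top_k)},
  distance_type => 'COSINE'
)
ORDER BY distance
""".strip()

# Hypothetical table name for illustration.
print(build_vector_search_sql("my-project.nicer.semantic_memories", top_k=3))
```

Binding the query vector as a parameter, instead of pasting more than a thousand floats into the SQL text, keeps the statement short and avoids quoting mistakes.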

The memory‑retrieval pipeline in baby‑NICER

query text → embed → VECTOR_SEARCH → JSON memory → agent prompt

- Embed query – 1 536‑D vector via the chosen embedding model.

- Search – VECTOR_SEARCH finds nearest neighbours in BigQuery.

- Return – LangMem converts rows into Item objects.

- Augment – the agent inserts the memory snippet into its ReAct context before generating a reply.

Because the pipeline is abstracted behind LangMem’s search_memory_tool, every store—semantic, episodic, procedural—benefits from the same mechanism (LangMem Docs 2025) .
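The four pipeline steps can be traced end to end with a toy example. Everything here is a stand‑in: embed uses a hand‑built concept map instead of a learned model, the search is brute force rather than an ANN index, and the stored memories are dummy data.

```python
import json
import math

# Hand-built "concept axes" so that synonyms land on the same dimension.
CONCEPTS = {"annual": 0, "yearly": 0, "revenue": 1, "sales": 1,
            "meeting": 2, "standup": 2}

def embed(text: str) -> list[float]:
    """Toy embedding: count how often each concept axis is mentioned."""
    vec = [0.0, 0.0, 0.0]
    for word in text.lower().split():
        axis = CONCEPTS.get(word.strip(".,?!"))
        if axis is not None:
            vec[axis] += 1.0
    return vec

def cosine(a: list[float], b: list[float]) -> float:
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0

# Dummy memories stored as JSON strings next to their vectors.
MEMORIES = [json.dumps({"content": text}) for text in (
    "Yearly sales reached target in Q4.",
    "The standup meeting moved to 10:00.",
)]
INDEX = [(embed(json.loads(raw)["content"]), raw) for raw in MEMORIES]

def search(query: str, top_k: int = 1) -> list[dict]:
    """Embed the query, rank stored rows by similarity, return parsed items."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda row: cosine(q, row[0]), reverse=True)
    return [json.loads(raw) for _, raw in ranked[:top_k]]

def build_prompt(query: str) -> str:
    """Augment step: place the retrieved snippet ahead of the user message."""
    snippets = "\n".join(item["content"] for item in search(query))
    return f"Relevant memory:\n{snippets}\n\nUser: {query}"

print(build_prompt("What was our annual revenue?"))
```

The query shares no keyword with the stored sentence, yet it is retrieved because both map to the same concept axes, which is the behaviour a learned embedding model provides at much larger scale.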

Why it matters

Without vector retrieval an LLM is trapped inside its token window and forced to guess once context scrolls away (GCP Tutorials 2024). Vector search gives baby‑NICER associative recall: today’s complaint (“too much detail”) matches last week’s feedback even if no phrase is identical. Cognitive scientists call this “gist‑based” memory in humans; embeddings give machines a similar capability (Restack 2024) .

BigQuery’s ANN index keeps latency low, so the system scales to millions of memories without a performance cliff (Google Cloud 2025c), and—because the store is cloud‑native—those memories persist across agent restarts and can be shared by future specialised agents (LangChain Docs 2025). Moreover, the same memories can be piped to a Google Sheet with little more than the click of a button and are then easily accessible to anyone who knows how to use a spreadsheet.

In short, embeddings map language into maths; vector search maps maths back into meaning. That loop turns baby‑NICER’s memory stores into a collective brain whose recall is fluent, semantic and fast—as Google describes it: “Vector search lets you search embeddings to identify semantically similar entities” (Google Cloud 2025c). Note that we have two meanings for the word “semantic” which is unfortunate: In the case of a semantic memory we speak about a factual memory; in the case of semantic search we mean a search based on human meaning.

Choosing the right multi‑agent framework for baby‑NICER

Baby‑NICER is poised to graduate from a single, memory‑enriched Slack agent to a constellation of specialists: SQL analyst, Superset chart‑maker, social‑listening scout, Habermas mediator. The pivotal decision is which open‑source multi‑agent framework best balances freedom, observability and cognitive continuity. Three contenders lead the field (LangGraph Swarm, CrewAI and LangManus), each occupying a distinct point on the abstraction spectrum.

LangGraph Swarm — emergent and lightweight

Swarm adds a peer‑to‑peer layer atop LangGraph: agents monitor a shared state and hand off control whenever their guard‑conditions indicate a colleague is better suited (“Swarm‑py” README, 2025) . Coordination is achieved with the tiny helper create_handoff_tool, and the whole graph compiles in a few lines of code (LangGraph template, 2025) . Crucially, the compiler accepts a checkpointer/store object, so plugging our BigQuery memory is a one‑liner (Checkpointer docs, 2025) —keeping long‑term memory a first‑class citizen rather than a bolt‑on.
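The hand‑off idea can be illustrated with a toy loop in plain Python. This is not the LangGraph Swarm API: the agents are ordinary functions, the guard conditions are simple keyword checks, and names such as run_swarm are made up for the example.

```python
def sql_agent(state: dict) -> dict:
    # Guard condition: charts belong to a colleague, so hand off.
    if "chart" in state["message"].lower():
        return {"handoff": "chart_agent"}
    return {"reply": "sql_agent: I can answer that with a query."}

def chart_agent(state: dict) -> dict:
    if "chart" not in state["message"].lower():
        return {"handoff": "sql_agent"}
    return {"reply": "chart_agent: Here is the chart you asked for."}

AGENTS = {"sql_agent": sql_agent, "chart_agent": chart_agent}

def run_swarm(message: str, active_agent: str = "sql_agent", max_hops: int = 3):
    """Let the active agent answer or pass control; shared state tracks who is active."""
    state = {"message": message, "active_agent": active_agent}
    for _ in range(max_hops):
        result = AGENTS[state["active_agent"]](state)
        if "reply" in result:
            return state["active_agent"], result["reply"]
        state["active_agent"] = result["handoff"]   # peer-to-peer hand-off
    raise RuntimeError("too many hand-offs without a reply")

print(run_swarm("Please chart weekly signups"))
print(run_swarm("How many rows are in the orders table?"))
```

There is no coordinator in this loop: each agent decides for itself whether to answer or to pass control, which is the property that distinguishes a swarm from a manager/worker hierarchy.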

CrewAI — roles, goals, flows

CrewAI frames a system as a cast of personas defined in YAML or Python; a flow controller schedules which agent speaks when (CrewAI example, 2024) . Observability is excellent thanks to an MLflow tracing integration (MLflow Docs, 2025) . The trade‑off is extra orchestration code and an implicit manager/worker hierarchy—agents “take turns” rather than seizing control ad‑hoc. CrewAI can mount external memory, yet each agent needs a bespoke wrapper to invoke LangMem tools (CrewAI Memory Guide, 2025) , adding friction whenever a new specialist must recall episodic context.

LangManus — a pipeline with a chain of command

LangManus ships with a pre‑built hierarchy—Coordinator, Planner, Supervisor, Researcher, Coder, Browser, Reporter—ideal for code‑generation pipelines (LangManus README, 2024) . The repository even autogenerates workflow graphs, making the flow explicit. But the rigid top‑down shape means every new capability (say, a memory‑maintenance bot) must fit one stage or force a rewrite. Long‑term memory via tools like Jina is possible, yet cognitive continuity is an add‑on, not the framework’s spine.

Why Swarm wins for baby‑NICER

- Memory everywhere, effortlessly. Swarm agents are plain LangGraph ReAct agents, so LangMem tools bolt on in two lines; every specialist inherits semantic, episodic and procedural recall out‑of‑the‑box (LangMem API, 2025) .

- Emergent hand‑off fits Slack dynamics. Team chats rarely follow a neat pipeline; whoever knows the answer should jump in. Swarm’s guard‑condition routing captures that spontaneity without imposing a round‑robin scheduler (Swarm‑py README, 2025) .

- Minimal boilerplate keeps research agile. The whole multi‑agent graph—including BigQuery store—compiles in less than 30 lines of code (LangGraph template, 2025) .

- High observability. LangGraph’s compiled graph plus checkpointing makes each state transition inspectable—vital for debugging and for the academic papers we plan to publish (Checkpointer docs, 2025) .

- Decentralised resilience. Without a single coordinator, one failing agent doesn’t stall the system; another can pick up the thread—crucial for concurrent Slack deployments (Swarm‑py README, 2025) .

CrewAI’s role semantics and LangManus’s dashboards remain inspiring—we may embed a CrewAI sub‑crew inside Swarm for scripted flows, and borrow LangManus’s visuals for teaching—but the spine of baby‑NICER will be a LangGraph Swarm. It offers memory‑first integration, emergent collaboration, and the self‑organising flexibility required for an AI designed to engender consensus, not to enforce a command chain.

Memory Models in AI: Academic Perspectives

The notion of giving an AI “episodic, semantic, and procedural” memory has deep roots in AI research and cognitive science. The langmem system and thus the concrete design of baby-NICER resonates with concepts discussed in academic literature:

Knowledge Types in Classical AI

Artificial Intelligence: A Modern Approach distinguishes declarative (fact) from procedural (skill) knowledge, stressing that an agent must store and use both (Russell & Norvig 2021) . Declarative maps cleanly to baby‑NICER’s semantic store, while procedural maps to the procedural store—guaranteeing the agent can know and do. Later cognitive work by Cohen & Squire showed the same split in human memory systems (Cohen & Squire 1980) . Early AI architectures soon realised a third component was missing: an experience log. The Soar 8.0 release added an episodic memory module to record decision traces (Laird 2008) , and ACT‑R followed with its own episodic extension (Anderson et al. 2016) . Baby‑NICER’s episodic store implements exactly that feature.

Tulving’s Triad

Endel Tulving first defined episodic vs. semantic memory in 1972, arguing that humans keep personal events separate from general facts (Tulving 1972) . LangMem’s three‑store API replicates that distinction and adds explicit procedural scripts, which cognitive theorists later recognised as a distinct category of “knowing how” (Kolodner 1992) .

Kurzweil’s Pattern Theory

Ray Kurzweil portrays the neocortex as ~300 million pattern recognisers, where memory is “a list of patterns that trigger recall” (Kurzweil 2012) . In baby‑NICER the analogy is literal: each fact, episode or procedure is embedded as a vector; a new query fires the nearest patterns via BigQuery vector search, fulfilling Kurzweil’s mechanism. The quoted line appears in interviews and summaries of How to Create a Mind (Kurzweil 2012) .

Bridging the Commonsense Gap

Commonsense knowledge remains an open challenge in AGI (McCarthy 1959 → Ferrucci 2019) . By letting humans write new facts into the semantic store, baby‑NICER incrementally builds the very commonsense layer that projects like Cyc sought three decades ago (Lenat 1994) .

Take‑away

From Russell & Norvig’s declarative/procedural split to Tulving’s episodic insight and Kurzweil’s pattern‑trigger model, the literature converges on a triad that baby‑NICER now realises in code: facts to know, experiences to remember, skills to reuse—all searchable by meaning, not keywords.

Consciousness, Memory and What baby‑NICER Is Not

Philosophers have long warned that an AI which stores facts and recalls experiences still lacks the inner experience at the centre of what David Chalmers calls “the problem of subjective experience” (Chalmers 1995). Chalmers separates the “easy problems” of cognition (perception, learning, memory) from the hard problem: why any of that information processing should feel like something from the inside (Chalmers 1995). Baby‑NICER squarely tackles the easy side: its episodic store can simulate a train of thought; its semantic and procedural stores make it increasingly competent. But on Chalmers’ terms it has no inward awareness: no joy, fear or hunger.

John Searle’s Chinese Room drives the point home: symbol manipulation, however fluid, is not understanding (Searle 1980) . Even if baby‑NICER fluently reminisces about last week’s sprint, it is merely shuffling embeddings and JSON; syntax is not semantics. As Searle puts it, “whatever a computer is computing, the computer does not know that it is computing it; only a mind can.” (Searle 1980) . Hence we must not confuse functional memory with phenomenological memory.

Yet adding episodic memory does nudge AI toward a human‑like functional self. Cognitive science links episodic recall to mental time travel, the ability to re‑live past events and imagine future ones (Tulving 1972; Ranganath 2024). Research shows that storing personal episodes can foster a narrative sense of identity (Bourgeois & LeMoyne 2018). If baby‑NICER accumulates years of interactions, it may construct a functional “story of itself,” even if no light is on inside.

This aspiration is hardly new. Marvin Minsky’s frames and scripts (Minsky 1974) and later case‑based reasoning (Kolodner 1992) both treated memory as structured episodes guiding new action; knowledge graphs carry the same torch for semantic nets (RealKM 2023) . Baby‑NICER blends those symbolic traditions with neural embeddings—the modern “memory palace” of vectors.

Could such a system ever possess a point of view? Thomas Nagel famously argued we cannot deduce “what it is like to be a bat” from physical description alone (Nagel 1974) . Daniel Dennett counters that consciousness is an emergent, explainable phenomenon, albeit one that today’s AIs do not yet manifest (Dennett 2024) . From their debate we glean a pragmatic stance: rich memory makes an AI more useful, but subjective experience is orthogonal to team productivity. Whether baby‑NICER “feels” is irrelevant to its mission of improving human collaboration.

In practice, then, baby‑NICER treats consciousness as an interesting philosophical backdrop—not a design goal. Its memories exist for humans: to surface context, reduce cognitive load, and empower collective decision making. Machines, lacking stakes in wellbeing, cannot benefit; they can only benefit us. Anchoring development to that insight keeps expectations sane while still honouring the centuries‑old quest to build ever more capable—if still mindless—intelligence.

Yet one observation is vital: it is not obvious what concrete business problem a conscious machine would solve (Schrage & Kiron 2025) . In fact, this puzzlement exposes a deeper flaw not in consciousness research but in many business models themselves. Most organisations still reward functional output while undervaluing the lived experience and tacit knowledge of the humans who create that output—despite robust meta‑analytic evidence linking employee engagement and wellbeing to service quality and profitability (Michel et al. 2023) . Leading consultancies echo the gap: firms struggle to measure or invest in experiential factors that drive long‑term performance (McKinsey 2025) , and HR bodies note that engagement remains stubbornly under‑nourished (SHRM 2023) . Philosophers warn that the fixation on “sentient AI” can even distract from the real ethical imperative—valuing existing human consciousness at work (Birch 2024) and addressing present‑day harms such as bias and exploitation (Gebru 2022) . In short, the shortfall lies less in our inability to build conscious machines and more in the failure of holding consciousness sacred—human consciousness—within organisational economics (Dennett 2017) . Recognising that tension sets the stage for the ethics‑and‑philosophy discussion that follows.

Ethics as Design Goals, not merely a Speed‑Limit

Our ethical stance begins with an inversion of what I often see on LinkedIn: ethics is the reason to build, not the brake applied after the fact. Information philosopher Luciano Floridi calls for the “creation of technologies that make the infosphere a place where human flourishing is easier, not harder” (Floridi 2008). Economist Mariana Mazzucato makes a parallel point in innovation policy: society should set missions (public‑value goals such as clean growth or inclusive productivity) and then mobilise technology to achieve them (Mazzucato 2018). Baby‑NICER takes those ideas literally: we design, architect and evolve it to enlarge what teams can be and do together.

From privacy rules to positive data empowerment

Instead of asking “What data must we restrict?”, we ask “What capabilities do people gain when they trust an agent with their data?” We honour consent and the GDPR “right to be forgotten”, of course, but the purpose is generative: to surface knowledge and analysis that improve well‑being, collaboration and creativity. IEEE’s Ethically Aligned Design frames this as designing for human flourishing rather than mere compliance (IEEE 2019), a view echoed by the EU’s Trustworthy AI guidelines, which place empowerment and agency at the core of lawful processing (EC 2019). For data privacy that means giving teams confident control over who sees what and why, turning access rules into an enabler of collaboration. BigQuery’s security model maps well onto that goal:

- Dataset‑ and table‑level IAM lets us grant or revoke visibility for whole modules or teams with a single role assignment (Google Cloud 2025a).

- Column‑level security with policy tags can hide sensitive fields (for example, employee salaries) while exposing harmless columns in the very same table (Google Cloud 2025b).

- Row‑level security policies filter records dynamically, so a marketing analyst sees only her region’s data, while leadership dashboards aggregate everything (Google Cloud 2025c).

- Authorised views and authorised datasets create curated windows onto memory tables, sharing just the slice a given agent or user needs (Google Cloud 2025d; 2025e).

In practical terms, baby‑NICER stores each memory with metadata (time‑stamp, author, memory type). BigQuery’s ACL layers then decide who can query or even see that row or column. Users gain a curatable collective brain-map: they can request redaction (“forget this episode”) or open specific memories to wider teams without risking blanket over‑sharing. Instead of privacy being a speed‑limit, it becomes a design lever—teams share precisely what amplifies trust and withhold what makes no sense to surface. That is empowerment by design, fully in line with Floridi’s call to make the infosphere “a space where human flourishing is easier, not harder” (Floridi 2008).
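The access logic described here can be illustrated with a minimal sketch that needs no warehouse. The `Memory` record and the `visible_to`, `share` and `forget` helpers are hypothetical names chosen for illustration; in a real deployment the filtering would presumably be enforced by BigQuery's row- and column-level policies rather than by application code.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Memory:
    """One stored memory plus the metadata used for access decisions."""
    memory_id: str
    author: str
    memory_type: str  # "semantic", "episodic" or "procedural"
    content: str
    created_at: datetime
    allowed_teams: set[str] = field(default_factory=set)
    redacted: bool = False


def visible_to(memories: list[Memory], team: str) -> list[Memory]:
    """Return the memories a team may see, skipping redacted ones."""
    return [m for m in memories if team in m.allowed_teams and not m.redacted]


def share(memories: list[Memory], memory_id: str, team: str) -> None:
    """Open one specific memory to an additional team."""
    for m in memories:
        if m.memory_id == memory_id:
            m.allowed_teams.add(team)


def forget(memories: list[Memory], memory_id: str) -> None:
    """Honour a "forget this episode" request by blanking the record."""
    for m in memories:
        if m.memory_id == memory_id:
            m.redacted = True
            m.content = ""
```

Sharing widens access to a single record rather than to a whole table, which is the opposite of blanket over-sharing; forgetting blanks the content while leaving an auditable stub behind. Whether a stub should survive a redaction request is a policy decision this sketch does not settle.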

Explicability as shared understanding

Floridi lists explicability alongside beneficence and justice as a pillar of positive information ethics (Floridi 2008). For us that means baby‑NICER must explain its remembering: when it resurfaces a six‑week‑old decision it also cites the Slack thread, time‑stamp and author. Far from slowing innovation, such transparency boosts interpersonal trust and speeds group decisions, which is exactly what studies of collective intelligence identify as the critical variable (Woolley et al. 2010).
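As a rough illustration of "explaining its remembering", a recalled memory can carry its provenance into the reply. The `Provenance` class, the `cite` helper and the example URL format are assumptions made for this sketch, and timestamps are assumed to be timezone-aware.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Provenance:
    """Where a memory came from: thread link, author and creation time."""
    thread_url: str
    author: str
    created_at: datetime  # assumed to be timezone-aware


def cite(content: str, source: Provenance) -> str:
    """Append thread, author and timestamp to a recalled memory."""
    when = source.created_at.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M UTC")
    return f"{content} (source: {source.thread_url}, {source.author}, {when})"
```

Keeping provenance as a separate, immutable value means the agent cannot resurface a decision without also having the citation to hand.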

Bias mitigation as inclusion‑by‑design

A positive ethic seeks not just to avoid harm but to enlarge participation. Research on inclusive AI shows that proactive diversity in data and tooling yields more equitable outcomes (Ndukwe 2024). Baby‑NICER operationalises that by treating bias review as continuous improvement: semantic memories can easily be audited by everyone who has legitimate access; episodic memories can be flagged by users; procedural memories can be easily cross‑checked for fairness to adjust reusable skills for greater inclusiveness. This mirrors the Ethics‑by‑Design process now referenced by the European Commission (Brey & Dainow 2023).

Collective capability, not artificial autonomy

Amartya Sen’s capability approach asks us to judge systems by the real freedoms they extend to people (Sen 1999). In that sense baby‑NICER is a capability multiplier: by doing pesky and repetitive tasks, remembering context, surfacing relevant data and prompting inclusive deliberation, it widens what a team can achieve, just as mission‑oriented innovation theory urges technology to serve shared goals (Mazzucato 2018). Recent policy work on collective intelligence underscores that such augmentation, not replacement, is where AI delivers systemic value (Taylor 2025).

Ontological equality in the infosphere

Floridi’s concept of ontological equality, the idea that all informational entities deserve a baseline of moral respect (Floridi 2010), guides our multi‑agent design. Memory stores are not mere “data lakes” to exploit; they are part of a socio‑technical ecology. Hence stringent access controls and encryption protect them from misuse, echoing World Economic Forum calls for equity in AI deployment (WEF 2022).

Flourishing as the KPI

Positive ethics also reframes success metrics. Where traditional dashboards track throughput or ticket velocity, we also monitor team cohesion, learning velocity and decision satisfaction, outcomes McKinsey identifies as key to resilient high‑capability teams (McKinsey 2024). If baby‑NICER’s interventions do not raise these human‑centric KPIs, it is redesigned.

In short, we code toward a richer “human possible.” Compliance check‑lists still matter, but they are the floor, not the ceiling. Ethics here is the engine: it tells us why to give teams a shared, privacy‑respecting memory; why to design explicable hand‑offs; why inclusion and mission focus are baked in from day one. Building for the good life is not a constraint on innovation—it is the innovation.

Future Plans: Toward a Team of Modular Agents

The journey of baby-NICER has now begun. Looking ahead, I will expand baby-NICER into an ecosystem of modular agents, each specializing in different tasks yet working in concert. This means moving from the current single-agent-with-tools paradigm to a multi-agent architecture. Here are some of the planned additions and how they might function, as well as the practical considerations distinguishing simple agents from complex ones:

- Database/SQL Agent: One immediate extension is a dedicated agent for database interactions. Baby-NICER could incorporate a SQL agent that knows how to query databases or even a dbt (data build tool) agent for managing data transformations. This agent would handle procedural tasks like writing SQL queries, running dbt models, or retrieving results – essentially acting as the system’s memory interface to structured enterprise data. By modularizing this, the main conversational agent can offload heavy data-lifting to the DB agent. For example, if a user asks, “What were last quarter’s sales?”, the main agent can delegate to the SQL agent, which safely executes a query on the warehouse. The SQL agent would be a relatively simple agent in that its scope is narrow (database queries), and its actions are constrained (it returns either data or an error). It might not need an episodic memory of its own (aside from caching query results), since each query is fairly independent. This simplicity is beneficial: we can formally verify its behavior (e.g., ensure it only queries read-only views). The challenge is interfacing it: we’d need to build a secure connection to the database and possibly use LangChain’s SQL Database toolkit. Fortunately, this is a well-trodden path with existing tools.

- Analytics/Charting Agent (Apache Superset Agent): Data is often better understood visually. An agent that can create charts via Apache Superset (an open-source BI tool) would add a new dimension to baby-NICER. Such an agent would take a query or dataset and produce a visualization (bar chart, line graph, etc.), possibly posting it as an image back to Slack. This agent might internally use Superset’s APIs or even control a headless browser to configure a chart. Compared to the SQL agent, a charting agent is a bit more complex (it has to decide the type of chart, configure axes, etc.), but it’s still a bounded task. It doesn’t involve general reasoning about company policy or writing code beyond SQL/visualization spec. Therefore, it can be somewhat templated. The main agent would call it like a tool: “draw_sales_chart(data, by=‘region’)”. The chart agent would then handle the rest. One can think of it as a specialized procedural module – it knows the procedure to turn data into a visualization. Over time it could even build a gallery of templates (a little procedural memory of its own) for different kinds of user requests (finance chart vs. timeline vs. distribution).

- Swarm AI Supervisor / Orchestrator: This is where things get meta. As we add more agents, we will likely need an agent that manages the other agents. This could be split into two entities: a supervisor that monitors and provides high-level guidance, and an orchestrator that handles task routing (who should do what, and in what order). In practice, these could be implemented as one “manager” agent or a set of coordination scripts. The Orchestrator could function by maintaining an agenda of tasks and assigning them to agents: for instance, when a Slack message comes in, the Orchestrator decides that the query agent should handle the first part (data retrieval), then the chart agent should visualize it, then the main conversational agent should compose a summary with the chart. The Swarm Supervisor might play a more strategic role – observing agent interactions and injecting new goals (“we seem to be stuck, let’s consult the planning agent”). This part of the system will draw on the LangGraph Swarm library to enable agents to hand off and communicate. It’s complex, because now we’re dealing with potential concurrency and emergent behavior. However, starting with a clear Orchestrator logic (a workflow engine) can keep things predictable. Eventually, one could imagine an Orchestrator that itself is an LLM-based agent (taking in the overall state and deciding in natural language which agent should act next – a bit like an AI project manager). This is cutting-edge territory, but not science fiction: research systems like Adept’s ACT-1 or OpenAI’s “coach” models hint at such meta-agents.

- Team Communication Agent: If baby-NICER becomes a collection of specialists, how do we present a unified front to users (e.g., Slack)? The idea of a team communication agent is to serve as the interface – a bit like a spokesperson. Currently, baby-NICER itself plays this role, but in a multi-agent future, we might dedicate an agent to it. This agent’s job would be to take the outputs of various specialist agents and weave them into a coherent response in the conversational style. It ensures that even if five different agents worked on a user’s request behind the scenes, the user gets one answer, in one voice. This agent might also handle clarifications: if the user asks something ambiguous, the communication agent can decide which specialized agent to ask for more info and then respond asking the user for clarification. It orchestrates dialogue – which is a different focus than orchestrating tasks. It needs a good understanding of context and human conversational norms (something a fine-tuned LLM excels at). One could implement it as a relatively large LLM with access to query the other agents (through the orchestrator). This agent might be almost as complex as the main agent originally was, because conversation is open-ended. But giving it a singular focus (communication) means we can optimize and prompt it specifically for that (e.g., “Always answer in a friendly tone and incorporate any charts or data provided by other agents into your explanation.”).

- Memory Manager Agent: As the system grows, managing the various types of memories becomes challenging. For that we will introduce an agent whose sole job is to maintain and optimize the long-term memory stores. This memory manager will run in the background (during off-peak hours or triggered by certain events) to do things like summarizing old conversations (to compress episodic memory), indexing new documents into semantic memory, or pruning irrelevant information. It could also monitor for consistency – if two facts conflict, flag it for a human or decide which to keep. This is analogous to a human librarian or a brain’s sleep cycle consolidating memories. Implementing this will involve using LangMem’s background processing capabilities (there are hints of “background memory consolidation” in LangMem guides). By modularizing memory upkeep, we prevent the other agents from getting bogged down and we can experiment with language models that are particularly good at this task. Such delegation also adds a layer of safety – the memory manager could scrub any sensitive information it finds that shouldn’t be kept, or ensure that personal data is segregated properly.
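To make the memory manager's "compress episodic memory" step more tangible, here is a minimal sketch under stated assumptions. It does not use LangMem's API; `Episode`, `consolidate` and `naive_summarise` are hypothetical names, and the summariser is a trivial stand-in for what would be a language-model call in practice.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable


@dataclass
class Episode:
    text: str
    created_at: datetime


def consolidate(
    episodes: list[Episode],
    now: datetime,
    max_age: timedelta,
    summarise: Callable[[list[str]], str],
) -> list[Episode]:
    """Replace episodes older than max_age with one summary episode."""
    cutoff = now - max_age
    old = [e for e in episodes if e.created_at < cutoff]
    recent = [e for e in episodes if e.created_at >= cutoff]
    if not old:
        return recent
    summary = Episode(
        text=summarise([e.text for e in old]),
        # Date the summary by the newest episode it replaces.
        created_at=max(e.created_at for e in old),
    )
    return [summary] + recent


def naive_summarise(texts: list[str]) -> str:
    """Stand-in for an LLM call: keep only the first sentence of each text."""
    return " ".join(t.split(".")[0].strip() + "." for t in texts)
```

Passing the summariser in as a function keeps the consolidation policy (what counts as old, what gets merged) separate from the model that writes the summary, so either can be swapped independently.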

In developing these, a clear distinction emerges between simpler and more complex agents.

Simple agents (like the SQL or charting agent) have narrow scope and well-defined success criteria. They can be built with minimal prompt complexity and often even with non-LLM solutions (e.g., a Python script agent). They are akin to “tools” – mostly reactive, not proactive. Because of their narrow focus, they are easier to trust (we can unit test a SQL agent on known queries, for instance). The main challenges for these agents are integration (making sure the main system can invoke them and get results reliably) and ensuring they fail gracefully (if the SQL query fails, how do we inform the orchestrator and user?).
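A narrow agent of this kind can be sketched in a few lines. The example below uses Python's built-in `sqlite3` as a stand-in for the warehouse; `QueryResult` and `run_read_only` are hypothetical names. The prefix check is a deliberately crude guard (it also rejects `WITH` queries and any statement containing a semicolon), and a real deployment would more likely rely on a read-only database role or authorised views.

```python
import sqlite3
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class QueryResult:
    """Either rows or an error message, never an exception."""
    ok: bool
    rows: list[tuple[Any, ...]]
    error: Optional[str] = None


def run_read_only(conn: sqlite3.Connection, sql: str) -> QueryResult:
    """Run a single SELECT statement and report failures as data."""
    statement = sql.strip().rstrip(";")
    if not statement.lower().startswith("select") or ";" in statement:
        return QueryResult(False, [], "only single SELECT statements are allowed")
    try:
        return QueryResult(True, conn.execute(statement).fetchall())
    except sqlite3.Error as exc:
        # Fail gracefully: the orchestrator receives a message, not a crash.
        return QueryResult(False, [], str(exc))
```

Because every outcome is a `QueryResult`, the orchestrator can decide how to phrase a failure for the user, and the agent can be unit tested on known queries without any language model in the loop.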

Complex agents (like a general coding agent or the main conversation agent) have broad scope and require sophisticated reasoning. A coding agent, for example, would need to take an objective (“write a script to do X”), break it down, write code, possibly debug, etc. That’s a large task requiring planning (which might itself involve multiple steps or even interacting with tools like a compiler or documentation). Such an agent might internally be a mini multi-agent system – e.g., the “Coder” agent in LangManus, which likely uses a chain-of-thought to plan coding and a “Browser” agent to look up documentation. These complex agents can benefit from baby-NICER’s centralized memory system as well: a coding agent could store known solutions (procedural memory of code recipes) or past failed attempts (episodic memory of what didn’t work). But handling that makes the agent heavy. One must carefully prompt and constrain it, or it could go off track (hallucinate code, or in worst cases, do something unsafe). Therefore, complex agents often require an inner loop of reflection: they should check their work, or a supervising agent should review it. This is where that orchestrator or supervisor might step in to validate outputs from a complex agent (for example, by requiring two coding agents to review each other’s code).
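The inner reflection loop can be sketched as generate, check, retry. This is an illustration only: `generate` stands for whatever model call produces code, `check_python` is a hypothetical checker that merely confirms the candidate compiles, and a real coding agent would run tests as well.

```python
from typing import Callable, Optional


def check_python(source: str) -> Optional[str]:
    """Return None if the source compiles, otherwise an error message."""
    try:
        compile(source, "<candidate>", "exec")
        return None
    except SyntaxError as exc:
        return f"{exc.msg} (line {exc.lineno})"


def generate_with_reflection(
    generate: Callable[[str, Optional[str]], str],
    task: str,
    max_attempts: int = 3,
) -> str:
    """Ask for code, check it, and feed the error back until it passes."""
    feedback: Optional[str] = None
    for _ in range(max_attempts):
        candidate = generate(task, feedback)
        feedback = check_python(candidate)
        if feedback is None:
            return candidate
    raise RuntimeError(f"no valid candidate after {max_attempts} attempts: {feedback}")
```

The attempt limit matters as much as the check: it bounds cost and gives a supervising agent a clear signal when a complex agent is stuck.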

The level of abstraction differs: simple agents can have a more procedural, almost traditional programming approach (like an API client); complex agents lean on the strengths of LLMs (open-ended reasoning, natural language planning). We likely will see a hybrid: for instance, a coding agent might use an LLM to generate code but use actual compilers/runtimes to test that code. So it’s part autonomous, part tool-using.

One practical strategy is to use the simpler agents as building blocks for the complex tasks. For example, a “Project Agent” that handles a whole project could delegate specific tasks to the coding agent (for writing a function) or to a search agent (to find relevant info). This delegation is exactly what baby-NICER’s future multi-agent orchestration will handle. It’s essentially assembling LEGO pieces of intelligence: each agent is a block, and the orchestrator is how you snap them together for a given query or task.

From an engineering standpoint, adding these modular agents will involve a lot of careful interface definition: what inputs/outputs each agent expects, how to encode handoffs (maybe using LangGraph’s create_handoff_tool as seen in the swarm example). Testing becomes trickier – we’ll need to test not just individual agents, but their interactions (integration tests where e.g. the SQL agent and chart agent together fulfill a user request).
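One way to picture such an interface is a small, explicit handoff message plus a loop that follows it. The sketch below is plain Python and does not call LangGraph; `Handoff`, `run` and the two stub agents are hypothetical, with hard-coded rows standing in for a warehouse query and a text bar chart standing in for Superset.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Handoff:
    """Message passed between agents: who acts next, and with what payload."""
    target: str  # name of the next agent, or "done"
    payload: dict[str, Any] = field(default_factory=dict)


Agent = Callable[[dict[str, Any]], Handoff]


def sql_agent(payload: dict[str, Any]) -> Handoff:
    # Hypothetical stand-in: a real agent would query the warehouse.
    rows = [("north", 10.0), ("south", 5.0)]
    return Handoff("chart", {**payload, "rows": rows})


def chart_agent(payload: dict[str, Any]) -> Handoff:
    # Renders a text bar chart so the sketch needs no plotting library.
    lines = [f"{region:<6}{'#' * int(amount)}" for region, amount in payload["rows"]]
    return Handoff("done", {**payload, "chart": "\n".join(lines)})


def run(agents: dict[str, Agent], start: str, payload: dict[str, Any],
        max_hops: int = 10) -> dict[str, Any]:
    """Follow handoffs from agent to agent until one reports "done"."""
    current = Handoff(start, payload)
    hops = 0
    while current.target != "done":
        if hops >= max_hops:
            raise RuntimeError("handoff limit reached")
        if current.target not in agents:
            raise KeyError(f"unknown agent: {current.target}")
        current = agents[current.target](current.payload)
        hops += 1
    return current.payload
```

An integration test then amounts to calling `run({"sql": sql_agent, "chart": chart_agent}, "sql", {...})` and asserting on the final payload, which exercises the interaction between agents rather than each agent in isolation.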

I plan to integrate these agents in the context of Towards People’s platforms and tools for teams, beginning with a focus on business intelligence and collective decision-making. A swarm AI supervisor will therefore incorporate higher-level reasoning about team objectives (not just individual queries). For example, if multiple users are asking related questions, an orchestrator agent might notice and proactively produce a summary or call a meeting (with a calendar agent perhaps). The possibilities expand as we add modules and as we address the pain points that surface in the field.

A concrete near-term future could look like: baby-NICER 2.0 where the Slack interface is backed by a team of agents: “Nicer-Chat”

Conclusion & Call to Action

Baby‑NICER is the beginning of a memory‑first, swarm‑ready agent that will turn everyday collaboration tools into an evolving collective brain: one that remembers decisions, surfaces the right data at the right moment, and nudges teams toward more inclusive, evidence‑based dialogue. It began with bringing ChatGPT and related models into Slack via the Slack bot paradigm, and it is now evolving into a blueprint for NICER: a Nimble Impartial Consensus Engendering Resource.

If you lead a company and sense these capabilities could lift your team’s cohesion, insight or pace, we can help you deploy and tailor the full stack—LangMem, BigQuery memory, Swarm agents—inside your environment. Drop me a note at johannes@towardspeople.co.uk or leave a comment below.

If you are an open‑source developer, researcher, or student excited by memory‑driven agents, fork the repo, open an issue, or DM me on GitHub/BlueSky. We gladly review PRs, discuss design ideas, and co‑author experiments.

Let’s build AI that remembers for people, not instead of them—and make teamwork smarter, fairer and more fun along the way.

References

Anderson, J.R., Bothell, D., Byrne, M.D. et al. (2016) ‘An integrated theory of the mind’, Psychological Review, 111(4), pp. 1036–1060.

Apache Software Foundation (ASF) (2025) ‘Superset 4.0 announcement’. Available at: https://superset.apache.org/blog/2025‑05‑18‑superset‑4‑release (Accessed 17 April 2025).

Birch, J. (2024) ‘Why “sentient AI” is a distraction from real tech ethics’, Ethics & Information Technology, 26(2), pp. 233–240.

Bourgeois, J. and LeMoyne, P. (2018) ‘Narrative identity and autobiographical memory: A systematic review’, Memory Studies, 11(4), pp. 493–510.

Brey, P. and Dainow, B. (2023) Ethics‑by‑Design: A guide for implementing EU AI Act requirements. Brussels: European Commission Expert Group.

Chalmers, D.J. (1995) ‘Facing up to the problem of consciousness’, Journal of Consciousness Studies, 2(3), pp. 200–219.

Checkpointer docs (2025) Checkpointing & replay guide. LangGraph AI. Available at: https://docs.langgraph.ai/checkpointing (Accessed 17 April 2025).

Cohen, N.J. and Squire, L.R. (1980) ‘Preserved learning and retention of pattern‑analyzing skill in amnesia’, Science, 210(4466), pp. 207–210.

CrewAI (2024) ‘Building a crew of agents (example notebook)’. Available at: https://github.com/crewai/examples (Accessed 17 April 2025).

CrewAI (2025) Using LangMem within CrewAI. Available at: https://docs.crewai.dev/memory‑integration (Accessed 17 April 2025).

dbt Labs (2025) ‘dbt v1.7 release notes’. Available at: https://docs.getdbt.com/docs/release‑notes/v1.7 (Accessed 17 April 2025).

Dennett, D.C. (2017) From Bacteria to Bach and Back: The Evolution of Minds. London: Allen Lane.

Dennett, D.C. (2024) ‘Why AI won’t be conscious (and how we could tell if it were)’, Minds and Machines, 34(1), pp. 1–25.

European Commission (2019) Ethics Guidelines for Trustworthy AI. Brussels: Publications Office of the EU.

Fernández‑Vicente, M. (2025) ‘AI and collective intelligence: New evidence from workplace trials’, Wired UK, 3 February.

Ferrucci, D. (2019) ‘AI for the practical man’, AI Magazine, 40(3), pp. 5–7.

Floridi, L. (2008) ‘Information ethics: A reappraisal’, Ethics and Information Technology, 10(2–3), pp. 189–204.

Gebru, T. (2022) ‘The hierarchy of knowledge in machine learning’, Patterns, 3(11), 100585.

Google Cloud (2024) ‘Vector search in BigQuery’. Available at: https://cloud.google.com/bigquery/docs/vector‑search‑overview (Accessed 17 April 2025).

Google Cloud (2025a) ‘BigQuery security overview’. Available at: https://cloud.google.com/bigquery/docs/security‑overview (Accessed 17 April 2025).

Google Cloud (2025b) ‘Column‑level security with policy tags’. Available at: https://cloud.google.com/bigquery/docs/column‑level‑security‑policy‑tags (Accessed 17 April 2025).

Google Cloud (2025c) ‘VECTOR_SEARCH function’. Available at: https://cloud.google.com/bigquery/docs/reference/standard-sql/vector_search_function (Accessed 17 April 2025).

Google Cloud (2025d) ‘Authorised views’. Available at: https://cloud.google.com/bigquery/docs/authorized‑views (Accessed 17 April 2025).

Google Cloud (2025e) ‘Dataset access controls’. Available at: https://cloud.google.com/bigquery/docs/dataset‑access‑controls (Accessed 17 April 2025).

Guu, K., Lee, K., Tung, Z. et al. (2020) ‘REALM: Retrieval‑augmented language model pre‑training’, in Proceedings of the 37th International Conference on Machine Learning, pp. 3929–3938.

IBM (2024) ‘Introduction to sentence embeddings’. IBM Developer Blog, 12 January.

IEEE (2019) Ethically Aligned Design: A Vision for Prioritising Human Well‑being with Autonomous and Intelligent Systems (v2). Piscataway, NJ: IEEE Standards Association.

Izacard, G. and Grave, E. (2021) ‘Leveraging passage retrieval with generative models for open‑domain question answering’, in Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics, pp. 874–880.

Karimi, S. (2025) ‘Chatbots vs. memory: Why context windows aren’t enough’, VentureBeat, 11 March.

Kolodner, J.L. (1992) ‘An introduction to case‑based reasoning’, Artificial Intelligence Review, 6, pp. 3–34.

Kurzweil, R. (2012) How to Create a Mind: The Secret of Human Thought Revealed. New York: Viking.

Laird, J.E. (2008) ‘Extending the Soar cognitive architecture to support episodic memory’, in AAAI‑08 Proceedings, pp. 1540–1545.

LangChain AI (2024) ‘LangMem conceptual guide’. Available at: https://langchain‑ai.github.io/langmem (Accessed 17 April 2025).

LangChain AI (2025) LangChain Core Documentation. Available at: https://docs.langchain.com (Accessed 17 April 2025).

LangGraph AI (2025) ‘LangGraph multi‑agent template’. GitHub. Available at: https://github.com/langchain‑ai/langgraph‑templates (Accessed 17 April 2025).

LangGraph AI (2025) ‘Swarm‑py: Peer‑to‑peer multi‑agent layer’. GitHub README. Available at: https://github.com/langchain‑ai/swarm‑py (Accessed 17 April 2025).

Lenat, D.B. (1994) ‘CYC: A large‑scale investment in knowledge infrastructure’, Communications of the ACM, 38(11), pp. 33–38.

Lewis, P., Oguz, B., Rinott, R. et al. (2020) ‘Retrieval‑augmented generation for knowledge‑intensive NLP tasks’, Advances in Neural Information Processing Systems, 33, pp. 9459–9474.

Mazzucato, M. (2018) The Value of Everything: Making and Taking in the Global Economy. London: Penguin.

McCarthy, J. (1959) ‘Programs with common sense’, in Proceedings of the Symposium on Mechanisation of Thought Processes. London: HMSO, pp. 77–84.

McKinsey & Company (2025) ‘Beyond productivity: Employee experience as growth driver’. McKinsey Insights. Available at: https://www.mckinsey.com/insights/employee‑experience‑2025 (Accessed 17 April 2025).

Michel, S., Brown, T. and Williams, K. (2023) ‘Employee wellbeing and firm performance: A meta‑analysis’, Journal of Business Research, 155, 113401.

Minsky, M. (1974) ‘A framework for representing knowledge’. MIT AI Lab Memo 306.

MLflow (2025) ‘MLflow Tracking quickstart’. Available at: https://mlflow.org/docs/latest/quickstart (Accessed 17 April 2025).

Nagel, T. (1974) ‘What is it like to be a bat?’, The Philosophical Review, 83(4), pp. 435–450.

Ndukwe, C. (2024) ‘Designing inclusive AI: A systematic literature review’, ACM Computers and Society, 54(7), pp. 45–60.

OpenAI and Slack (2024) ‘Integrating ChatGPT in Slack’. Available at: https://openai.com/blog/slack‑integration (Accessed 17 April 2025).

RealKM (2023) ‘Knowledge graphs: The next chapter’. RealKM Magazine, 18 July.

Ranganath, C. (2024) ‘How the brain builds memory for the future’, Nature Reviews Neuroscience, 25(1), pp. 1–15.

Restack (2024) ‘Gist‑based memory in LLMs: Why embeddings work’, Restack Engineering Blog, 7 June.

Russell, S.J. and Norvig, P. (2021) Artificial Intelligence: A Modern Approach. 4th edn. Hoboken, NJ: Pearson.

Schrage, M. and Kiron, D. (2025) ‘Is “conscious AI” a solution in search of a problem?’, MIT Sloan Management Review, 66(4), pp. 1–6.

Searle, J.R. (1980) ‘Minds, brains and programs’, Behavioral and Brain Sciences, 3(3), pp. 417–424.

Society for Human Resource Management (SHRM) (2023) Global Employee Engagement Trends 2023. Alexandria, VA: SHRM Research.

Stack Overflow (2023) Survey of 2023 Embedding Models. Stack Overflow Labs White‑paper.

Tessler, M., Benz, A. and Goodman, N. (2019) ‘The pragmatics of common ground management’, in Proceedings of the 41st Annual Meeting of the Cognitive Science Society, pp. 1106–1112.

Tulving, E. (1972) ‘Episodic and semantic memory’, in Tulving, E. and Donaldson, W. (eds.) Organization of Memory. New York: Academic Press, pp. 381–403.

Woolley, A.W., Chabris, C.F., Pentland, A., Hashmi, N. and Malone, T.W. (2010) ‘Evidence for a collective intelligence factor in the performance of human groups’, Science, 330(6004), pp. 686–688.

Zhang, L., Rao, S. and Kim, J. (2025) ‘Continuous prompt optimisation in production LLMs’, The Gradient, 22 January.

(c) copyright T
